Standard Lens Data in VFX Workflows

More recently, many cameras with Cooke /i (or LDS)-enabled PL lens mounts have integrated this lens metadata stream into the camera’s SDI monitor outputs. This removes the need for a dedicated cable attached to the lens and allows lens metadata to be embedded in, or associated with, each clip file recorded to camera media. In post-production, and especially in VFX work, post personnel can then easily inspect the exact settings of a particular lens (its model and focal length, plus the focus and iris settings for that shot) if, for example, they need to virtually re-create a particular lens-camera combination for 2D/3D compositing.
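As a rough illustration of what that inspection feeds into, the sketch below turns a recorded focal length into the horizontal angle of view a virtual camera would need. It assumes an ideal pinhole camera and ignores distortion and focus breathing. The sensor width is not part of the lens metadata and has to come from the camera and recording format, so the values used here are hypothetical.

```python
import math


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view of an ideal (distortion-free) pinhole camera."""
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def focal_length_pixels(focal_length_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Focal length expressed in pixels, a form many virtual-camera setups expect."""
    return focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical shot: a 50 mm lens on a recorded sensor area 36 mm wide, 4096 px across.
print(round(horizontal_fov_deg(50.0, 36.0), 2))
print(round(focal_length_pixels(50.0, 36.0, 4096), 1))
```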

However, other critical aspects of how a particular lens “sees” have often had to be painstakingly re-created, more or less by hand. Many lenses show subtle but noticeable “flaws” that are part and parcel of that lens’ character: how it “draws” an image onto film or a digital sensor. Chief amongst these are shading (often referred to as “vignetting” by DPs and camera assistants) and distortion (which can show up as a barrel or pincushion effect, or both, especially with zoom lenses). For example, say a CG creature moves from left to right across the frame: to look as though it was photographed through the same lens as the background, it should darken slightly and be warped by the lens’ distortion as it nears the edges of frame.

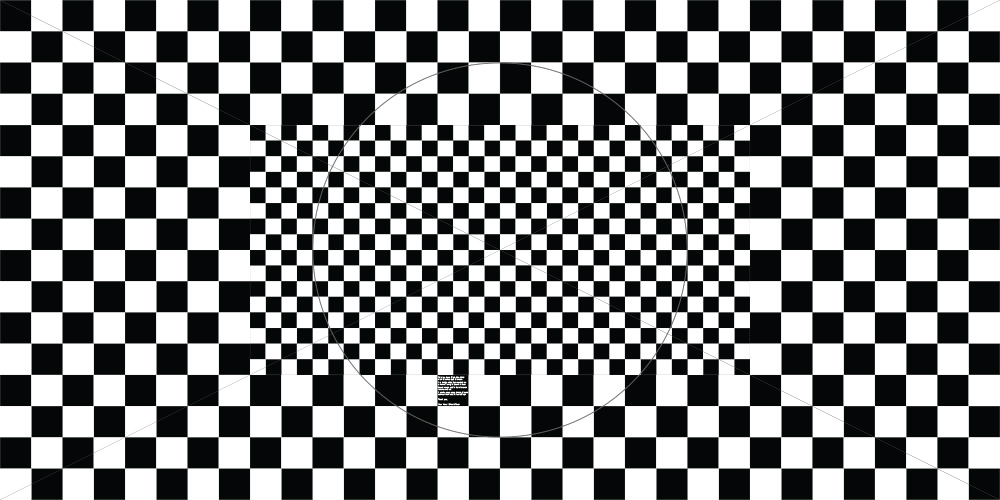
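To make those two “flaws” concrete, here is a minimal sketch using common textbook models, not ZEISS’ own representation: a radial polynomial for distortion and an even polynomial falloff for shading. Coordinates are normalized so the optical center sits at (0, 0), and all coefficient values are hypothetical.

```python
def distort_point(x: float, y: float, k1: float, k2: float = 0.0) -> tuple[float, float]:
    """Map an ideal (undistorted) normalized point to where the lens would place it.

    Simple two-term radial model (Brown-Conrady style, no tangential terms).
    Negative k1 pulls the edges inward (barrel); positive k1 pushes them out (pincushion).
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def shading_gain(x: float, y: float, a1: float, a2: float = 0.0) -> float:
    """Relative brightness at a normalized point, 1.0 at the optical center.

    Even polynomial falloff; a negative a1 darkens the image toward the corners.
    """
    r2 = x * x + y * y
    return max(0.0, 1.0 + a1 * r2 + a2 * r2 * r2)


# A point near the right edge of frame with mild (hypothetical) barrel distortion and shading.
print(distort_point(1.0, 0.5, k1=-0.05))
print(shading_gain(1.0, 0.5, a1=-0.2))
```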

Re-creating these aspects of a lens’ “character” has long been a tedious chore for VFX artists, who have to manually warp, and selectively darken, already-rendered frames of CG animation to match the “plate” (the background image captured in-camera as a canvas for compositing foreground elements created in the computer). Accurately re-creating these lens characteristics helps to “sell” the notion that the composited element, such as a CG character, was really there in the scene at the time of principal photography. This has required the camera crew to shoot (or try to remember to shoot ;)) grid charts to help VFX artists “map” the lens characteristics into the virtual environment. This often happens at checkout, so it can add time (and stress) to a busy camera assistant’s already full prep day(s). In post, VFX artists then map these grid chart shots into a “mesh warp,” allowing the lens’ characteristics to be applied to CG imagery during compositing.

Typical lens distortion grid chart, courtesy of Eric Alba.
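Rather than reproduce a full mesh warp, the sketch below shows a simpler parametric approach to the same problem: fitting a single radial distortion coefficient to grid chart points by least squares. It assumes the grid intersections have already been detected in the chart plate and paired with their ideal (undistorted) positions, all in normalized coordinates centered on the optical axis. `fit_k1` is a hypothetical helper, not part of any VFX package.

```python
def fit_k1(ideal: list[tuple[float, float]], observed: list[tuple[float, float]]) -> float:
    """Least-squares estimate of k1 in the model observed = ideal * (1 + k1 * r^2).

    r^2 = x^2 + y^2 of the ideal point. Rearranged, (observed - ideal) = k1 * (ideal * r^2),
    which is linear in k1, giving k1 = sum(r^2 * (d . p)) / sum(r^6),
    where p is the ideal point and d the observed displacement.
    """
    if len(ideal) != len(observed):
        raise ValueError("ideal and observed point lists must be the same length")
    num = 0.0
    den = 0.0
    for (xu, yu), (xd, yd) in zip(ideal, observed):
        r2 = xu * xu + yu * yu
        num += r2 * ((xd - xu) * xu + (yd - yu) * yu)
        den += r2 ** 3
    if den == 0.0:
        raise ValueError("need at least one grid point away from the optical center")
    return num / den


# Synthetic check: distort a 9x9 grid with a known (hypothetical) k1, then recover it.
true_k1 = -0.08
ideal_pts = [(i / 4.0, j / 4.0) for i in range(-4, 5) for j in range(-4, 5)]
observed_pts = [
    (x * (1.0 + true_k1 * (x * x + y * y)), y * (1.0 + true_k1 * (x * x + y * y)))
    for x, y in ideal_pts
]
print(fit_k1(ideal_pts, observed_pts))  # a value very close to -0.08
```

A real chart solve would typically fit more terms (and the optical center), and a mesh warp can capture irregularities a low-order polynomial misses.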

ZEISS XD Streamlines the Post Process

Enter ZEISS’ new lens metadata protocol, ‘XD’ (for eXtended Data). Based on the Cooke /i metadata protocol, XD adds these two important ‘dimensions’ to the metadata that can be transmitted to cameras and other peripherals, providing reference data for both the shading (aka vignetting) and distortion characteristics of ZEISS’ latest designs: the CP.3 XD and Supreme Prime full format lenses. Each focal length of the CP.3 XDs, as well as of the Supremes (the latter with data specific to a given production ‘batch’, denoted by the two-letter production code on the barrel), now provides a true frame-by-frame stream of shading and distortion information, which can be saved with each take’s metadata on compatible cameras.
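Because focus and iris can change during a take, and a lens’ shading and distortion vary with those settings, per-frame data lets a compositor pick the right correction for each frame. The sketch below models such a stream with a hypothetical record type; the field names are illustrative and do not reflect the actual XD data format.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameLensData:
    """One frame of lens metadata. Field names are hypothetical, not the XD specification."""
    frame: int               # frame index within the take
    focal_length_mm: float
    focus_distance_m: float
    t_stop: float
    distortion_k1: float     # radial distortion coefficient (illustrative model)
    shading_a1: float        # shading falloff coefficient (illustrative model)


def lens_data_for_frame(stream: list[FrameLensData], frame: int) -> FrameLensData:
    """Return the record for `frame`, or the most recent earlier one if that frame has none.

    Assumes `stream` is sorted by frame number.
    """
    frames = [rec.frame for rec in stream]
    i = bisect.bisect_right(frames, frame) - 1
    if i < 0:
        raise KeyError(f"no lens data at or before frame {frame}")
    return stream[i]


# Hypothetical focus pull: the distortion coefficient drifts as focus moves closer.
take = [
    FrameLensData(0, 50.0, 3.0, 2.8, -0.020, -0.30),
    FrameLensData(1, 50.0, 2.5, 2.8, -0.022, -0.30),
    FrameLensData(2, 50.0, 2.0, 2.8, -0.024, -0.30),
]
print(lens_data_for_frame(take, 1).distortion_k1)
```

Holding the last known value is one simple policy for frames without a record; interpolating between neighbors would be another.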

Which cameras, you ask? As of today, XD is supported by all RED DSMC2 bodies running firmware v7.1.0.1 or later and, most recently, by Sony’s VENICE running its high-frame-rate-enabling 4.0 firmware. And since the shading and distortion data is embedded in the clip file itself, post/VFX will never have to track down a project’s DIT or media manager to ask whether the data was included with the offloaded footage. For cameras that don’t yet support native capture of the new metadata format, ZEISS has worked with Ambient, makers of the Ambient MasterLockit Plus timecode generator, and Transvideo (using their StarliteHD-m display with ARRI cameras) to provide a compatible workflow for capturing and applying this metadata from almost any camera.
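When the metadata is captured by an external device rather than in the camera, it has to be matched back to the clip, and timecode is the natural key. The sketch below assumes both devices share the same non-drop-frame timecode at an integer frame rate, and that the external log is a mapping from timecode strings to per-frame data. That log format is hypothetical and is not taken from Ambient or Transvideo documentation.

```python
from typing import Any, Optional


def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < fps:
        raise ValueError(f"frame field {ff} is out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def align_to_clip(
    samples: dict[str, Any], clip_start_tc: str, clip_length: int, fps: int
) -> list[Optional[Any]]:
    """Return one external metadata sample per clip frame (None where nothing was logged)."""
    start = timecode_to_frames(clip_start_tc, fps)
    by_frame = {timecode_to_frames(tc, fps): data for tc, data in samples.items()}
    return [by_frame.get(start + i) for i in range(clip_length)]


# Hypothetical external log keyed by timecode; the first clip frame has no entry.
log = {"10:00:00:01": {"k1": -0.020}, "10:00:00:02": {"k1": -0.021}}
print(align_to_clip(log, "10:00:00:00", 3, 24))
```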

ZEISS has also produced plug-ins for two of the most popular compositing environments used today for 2D/3D compositing, paint and rotoscoping work: Foundry’s Nuke and Adobe After Effects. With the appropriate plug-in loaded, the shading and distortion details from the camera-original files can be applied directly to a shot, or group of shots, being worked on by a VFX artist. Additionally, ZEISS has developed an ‘Injection Tool’, allowing VFX Technical Directors and Assistant Editors to re-embed eXtended Data into EXR framestacks (aka frame sequences) for use with the Nuke plug-in. Both the plug-ins and the injection tool are available as free downloads from the ZEISS website.
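The plug-ins’ internals aren’t described here, but the core operation of applying a lens’ look to a clean CG render can be sketched: for each output pixel, find the point in the undistorted render that the lens would have imaged there, sample it, and multiply by the shading gain at that output position. This minimal version assumes a single radial coefficient, nearest-neighbor sampling, and a frame stored as a list of rows; `apply_lens_look` and `undistort_point` are hypothetical names, not the ZEISS plug-in API.

```python
def undistort_point(xd: float, yd: float, k1: float, iterations: int = 20) -> tuple[float, float]:
    """Invert x_d = x_u * (1 + k1 * r_u^2) by fixed-point iteration (suits mild distortion)."""
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        scale = 1.0 + k1 * r2
        xu, yu = xd / scale, yd / scale
    return xu, yu


def apply_lens_look(cg: list[list[float]], k1: float, a1: float) -> list[list[float]]:
    """Warp and shade a CG frame (rows of float pixel values) to mimic the lens."""
    h, w = len(cg), len(cg[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    norm = w / 2.0  # normalize so the left and right edges sit near x = -1 and x = +1
    out = [[0.0] * w for _ in range(h)]
    for row in range(h):
        for col in range(w):
            # Position of this output pixel in normalized (distorted) image coordinates.
            xd, yd = (col - cx) / norm, (row - cy) / norm
            # Which point of the ideal render would the lens have imaged here?
            xu, yu = undistort_point(xd, yd, k1)
            src_col = round(xu * norm + cx)
            src_row = round(yu * norm + cy)
            if 0 <= src_row < h and 0 <= src_col < w:
                gain = max(0.0, 1.0 + a1 * (xd * xd + yd * yd))
                out[row][col] = cg[src_row][src_col] * gain
    return out
```

Output pixels whose source falls outside the render are left black, which is one reason CG is often rendered with some overscan.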

Some may ask, “What took you so long?” After all, still photographers have had access to generic shading and distortion maps for popular lenses in programs like Adobe Camera Raw, Lightroom, and Phase One’s Capture One Pro for some time. Even so, the data provided by ZEISS’ CP.3 XD lenses and, especially, the production batch-specific data of the Supreme Primes should bring welcome relief to a tedious pain point for VFX artists doing virtual lens and camera modeling with today’s cutting-edge digital cinema cameras and compositing environments. XD is a welcome addition to “smart” camera technology, and we hope to see other manufacturers support it in their current and future models.

For more information on how to work with XD — from camera to VFX production — be sure to check out the ZEISS eXtended Data resource page on their site.

AbelCine publishes this blog as a free educational resource.